Artificial neural networks in various configurations are showing great potential in a number of applications.

Multi-Layer Neural Networks

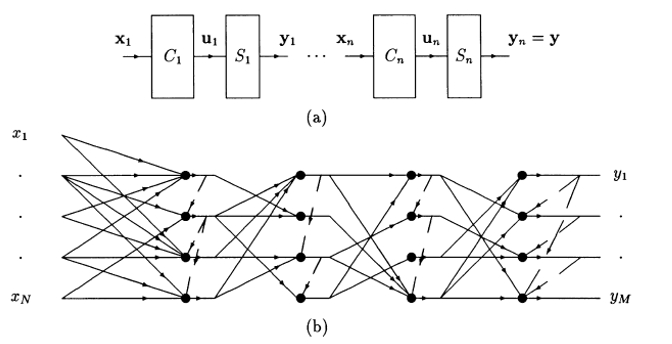

A multi-layer neural network is built using an information-preserving criterion. The structure provides a potentially powerful substrate for classification and filtering.

- F.A.N. Palmieri, M. Baldi, A. Buonanno and G. Di Gennaro, "Information-Preserving Networks and the Mirrored Transform," in Proc. XXIX IEEE International Workshop on Machine Learning for Signal Processing (MLSP2019), Pittsburgh, PA, USA, Oct. 13–16, 2019. DOI: 10.1109/MLSP.2019.8918805

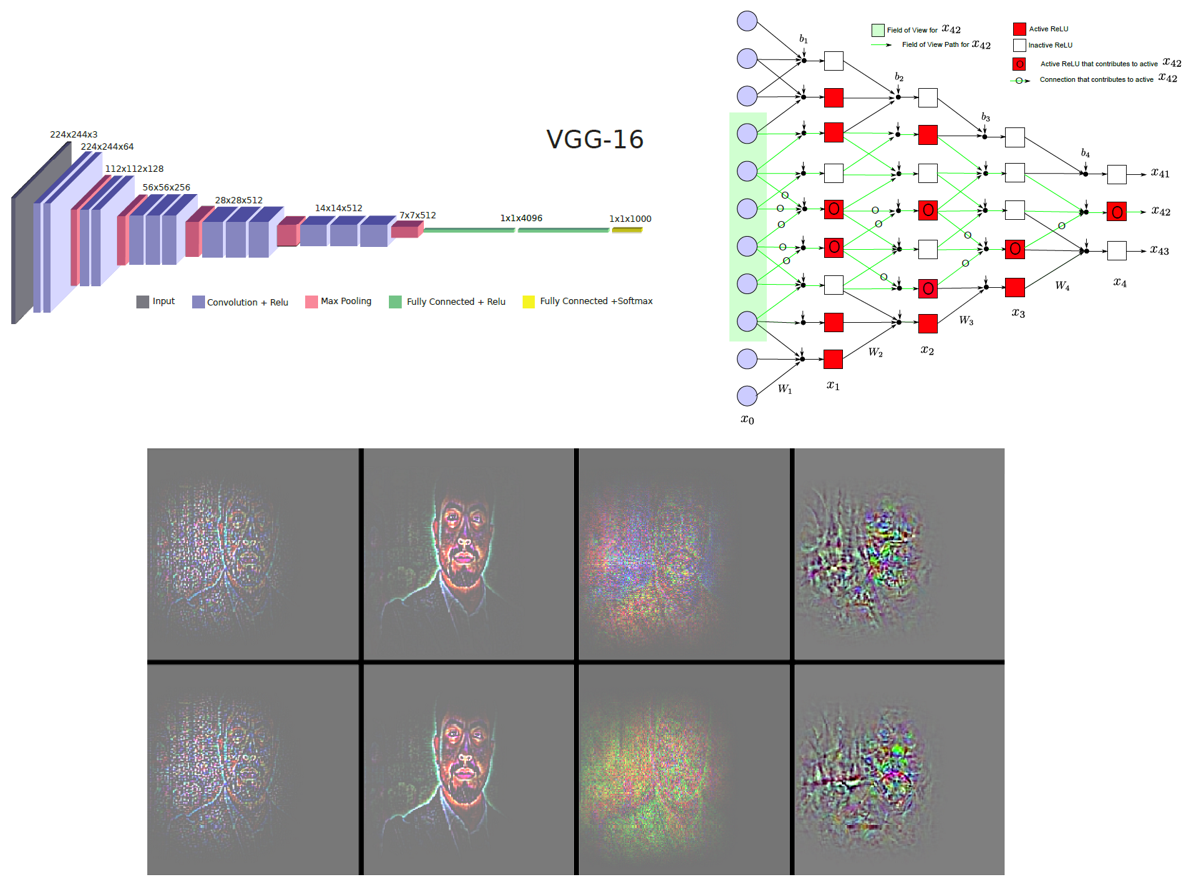

Detailed analyses of the activations in a popular multi-layer network reveal the inner workings of the system, which produces progressively higher-level embeddings of the information contained in the sensed image.

- F.A.N. Palmieri, M. Baldi, A. Buonanno, G. Di Gennaro and F. Ospedale, "Probing a Deep Neural Network," in Neural Approaches to Dynamics of Signal Exchanges (Smart Innovation, Systems and Technologies 151), A. Esposito, M. Faundez-Zanuy, F.C. Morabito and E. Pasero, Eds., Springer, 2019, pp. 201–211. DOI: 10.1007/978-981-13-8950-4_19. Also presented at the 28th Italian Workshop on Neural Networks (WIRN), June 13-15, 2018.

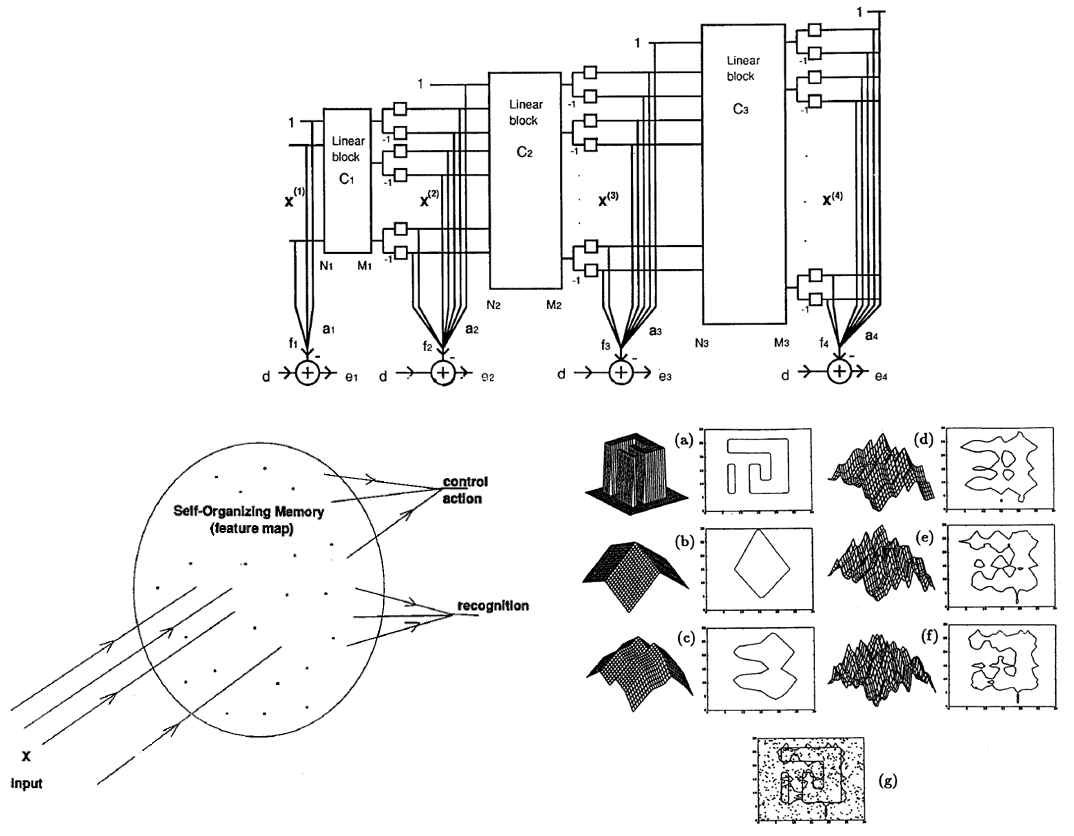

A cascade of linear layers with constrained connectivity, which maximize decorrelation according to an energy criterion, is shown to converge to a global principal component analyzer.

- F. Palmieri, M. Corvino, "Principal Components Via Cascades of Block-Layers," Proceedings of IEEE International Conference on Neural Networks, Houston, TX, pp. 1035-1040, June 1997.
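As a rough illustration of how a cascade of simple stages can end up computing principal components, the sketch below uses a swapped-in technique, not the block-layer algorithm of the paper above: each stage is a single linear neuron trained with Oja's rule on the residual left by the previous stage (deflation). Assuming zero-mean data, a small step size and distinct eigenvalues, stage k tends toward the k-th principal direction. The functions `oja_stage` and `cascade_pca` and all parameter values are hypothetical.

```python
import math
import random


def oja_stage(data, eta=0.005, epochs=20, seed=0):
    """Train one linear neuron y = w . x with Oja's rule; return a unit-norm w."""
    rng = random.Random(seed)
    w = [rng.gauss(0.0, 0.1) for _ in range(len(data[0]))]
    for _ in range(epochs):
        for x in data:
            y = sum(wi * xi for wi, xi in zip(w, x))
            # Oja's rule: Hebbian term y*x plus the decay -y^2*w that keeps ||w|| near 1
            w = [wi + eta * y * (xi - y * wi) for wi, xi in zip(w, x)]
    norm = math.sqrt(sum(wi * wi for wi in w))
    return [wi / norm for wi in w]


def cascade_pca(data, n_components, eta=0.005, epochs=20):
    """Cascade of single-neuron stages; each stage sees the previous stage's residual."""
    residual = [list(x) for x in data]
    components = []
    for k in range(n_components):
        w = oja_stage(residual, eta=eta, epochs=epochs, seed=k)
        components.append(w)
        # Deflation: subtract the projection onto the component just found
        next_residual = []
        for x in residual:
            y = sum(wi * xi for wi, xi in zip(w, x))
            next_residual.append([xi - y * wi for xi, wi in zip(x, w)])
        residual = next_residual
    return components


if __name__ == "__main__":
    rng = random.Random(42)
    # Zero-mean 2-D data: variance 9 along the first axis, 1 along the second
    data = [[3.0 * rng.gauss(0, 1), rng.gauss(0, 1)] for _ in range(500)]
    for w in cascade_pca(data, 2):
        print([round(wi, 3) for wi in w])
```

The deflation step is what makes the stages cooperate: once the first neuron has captured the dominant direction, the next one only sees what is left, so it cannot converge to the same component.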

Efficient training algorithms, obtained from a Kalman-filter-like formulation, are derived for multi-layer neural networks.

- S. Shah, F. Palmieri and M. Datum, "Optimal Filtering Algorithms for Fast Learning in Feedforward Neural Networks," in Neural Networks, Vol. 5, pp. 779-787, Sept. 1992.

- F. Palmieri, S. Shah, "Fast Training of Multilayer Perceptrons Using Multilinear Parametrization," Proceedings of IEEE-INNS Int. Joint Conference on Neural Networks, Washington DC, pp. 696-699, January 1990.

- S. Shah and F. Palmieri, "MEKA - A Fast Local Algorithm for Training Feedforward Neural Networks," Proceedings of Int. Joint Conference on Neural Networks, San Diego, CA, pp. III 41-46, July 1990.

- F. Palmieri, "An Approach to Faster Backpropagation," Published review of the paper "Speeding Up Backpropagation by Gradient Correlation," by D. V. Shreibman and E. M. Norris, Neural Network Review, Vol. 4, No. 1, 1990, p. 23.

- F. Palmieri, S. Shah, "A New Algorithm for Multilayer Perceptrons," Proceedings of the IEEE Int. Conference on Systems, Man, and Cybernetics, Boston, MA, pp. 427-428, Nov. 1989.
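The Kalman-filter view of training treats the network weights as the state to be estimated and each target as a noisy, nonlinear measurement of the network output. A minimal sketch under that assumption is a global extended Kalman filter (EKF) for a single logistic neuron; it is not the multilayer or MEKA algorithms of the papers above, and the names `ekf_train`, `mse`, the teacher weights and the noise settings `r`, `q`, `p0` are hypothetical.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def ekf_train(samples, n_inputs, r=0.1, q=1e-6, p0=1.0, epochs=20):
    """Global EKF training of a single neuron y = sigmoid(w . x).

    The weights are the filter state; each target d is a noisy measurement of y.
    """
    n = n_inputs
    w = [0.0] * n
    # Covariance of the weight estimate, initialised to p0 * I
    P = [[p0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    for _ in range(epochs):
        for x, d in samples:
            y = sigmoid(sum(wi * xi for wi, xi in zip(w, x)))
            # Linearised measurement row H = dy/dw = y (1 - y) x
            H = [y * (1.0 - y) * xi for xi in x]
            PH = [sum(P[i][j] * H[j] for j in range(n)) for i in range(n)]
            S = sum(H[i] * PH[i] for i in range(n)) + r   # innovation variance
            K = [v / S for v in PH]                       # Kalman gain
            e = d - y                                     # innovation
            w = [wi + ki * e for wi, ki in zip(w, K)]
            # P <- P - K H^T P + Q  (P is symmetric, so H^T P is the row PH^T)
            P = [[P[i][j] - K[i] * PH[j] + (q if i == j else 0.0)
                  for j in range(n)] for i in range(n)]
    return w


def mse(w, samples):
    return sum((d - sigmoid(sum(wi * xi for wi, xi in zip(w, x)))) ** 2
               for x, d in samples) / len(samples)


if __name__ == "__main__":
    rng = random.Random(1)
    w_true = [0.5, -1.0, 1.5]   # hypothetical teacher; first input is a constant bias
    samples = []
    for _ in range(200):
        x = [1.0, rng.uniform(-1, 1), rng.uniform(-1, 1)]
        samples.append((x, sigmoid(sum(a * b for a, b in zip(w_true, x)))))
    w = ekf_train(samples, 3)
    print([round(v, 3) for v in w], mse([0.0] * 3, samples), mse(w, samples))
```

Compared with plain gradient descent, the gain `K` adapts per weight through the covariance `P`, which is the usual source of the faster convergence attributed to Kalman-type training; the price is maintaining an n-by-n matrix.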

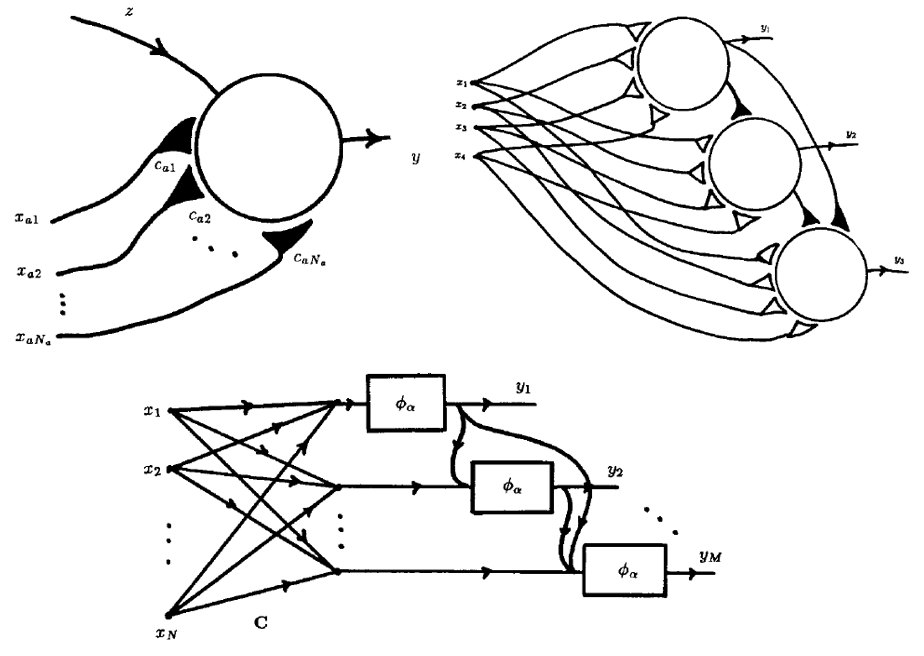

Recurrent Nets

When feedback is added, a neural network with sigmoidal nonlinearities becomes a dynamical system whose behavior may be very complicated to analyze. We show how the forward and lateral weights of the network can be computed to obtain a controlled behavior.

- A. Budillon, M. Corrente and F. Palmieri, "How a Neural Network Can Discover Gaussian Clusters," Proceedings of the International ICSC Workshop on Independence and Artificial Neural Networks, I&ANN'98, University of Laguna, Tenerife, Spain, ISBN: 3-906454-13-4, pp. 59-63, Feb. 1998.

- A. Budillon, M. Corrente and F. Palmieri, "EM Algorithm: A Neural Network View," Proceedings of the 9th Italian Workshop on Neural Nets, Springer Verlag Ed., Vietri s.m., SA, Italy, pp. 285-292, May 1997.

- A. Budillon, M. Corrente and F. Palmieri, "A Dynamic Neural Network for Approximating Gaussian Posterior Probabilities," Internal Technical Report, Dip. di Ing. Elettronica e delle Telecomunicazioni, Univ. di Napoli "Federico II", via Claudio 21, Napoli, Italy, 1998.
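One simple way to see how the lateral weights control the dynamics is a discrete-time recurrent layer y ← σ(W u + L y), with forward weights W and lateral weights L. Because the logistic slope never exceeds 1/4, the update is a contraction in the max norm whenever every row of L has absolute sum below 4, so the state settles to a unique fixed point. The sketch below assumes this model and uses that sufficient condition; it is not the weight-design procedure of the papers above, and `settle` and the example weights are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def settle(W, L, u, tol=1e-10, max_iter=1000):
    """Iterate y <- sigmoid(W u + L y) until the state stops changing.

    W: forward weights, L: lateral (feedback) weights, u: external input.
    """
    n = len(W)
    # Logistic slope <= 1/4, so max-row-abs-sum(L) / 4 bounds the Lipschitz constant
    lipschitz = max(sum(abs(v) for v in row) for row in L) / 4.0
    if lipschitz >= 1.0:
        raise ValueError("lateral weights too large for this convergence guarantee")
    drive = [sum(wij * uj for wij, uj in zip(row, u)) for row in W]
    y = [0.5] * n
    for it in range(1, max_iter + 1):
        y_new = [sigmoid(drive[i] + sum(L[i][j] * y[j] for j in range(n)))
                 for i in range(n)]
        if max(abs(a - b) for a, b in zip(y_new, y)) < tol:
            return y_new, it
        y = y_new
    raise RuntimeError("no convergence within max_iter")


if __name__ == "__main__":
    W = [[1.0, -0.5], [0.3, 0.8]]   # hypothetical forward weights
    L = [[0.0, -2.0], [-2.0, 0.0]]  # hypothetical mutual (lateral) inhibition
    y, iters = settle(W, L, [1.0, 0.5])
    print([round(v, 4) for v in y], iters)
```

The condition is only sufficient: larger lateral weights can still produce stable behavior, but they can also produce multiple equilibria or oscillations, which is exactly where a principled choice of weights matters.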

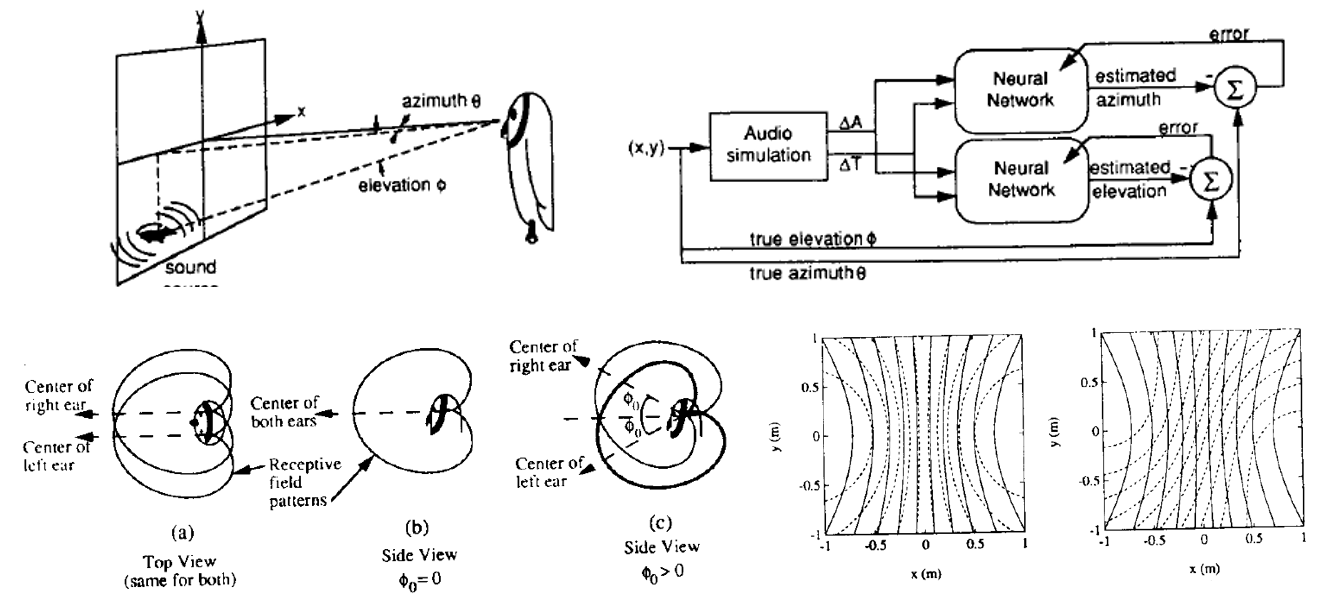

Neural Networks for Sound Localization

Inspired by the ability of the barn owl to locate objects with great precision from sound alone, we have analyzed the binaural sound localization problem using neural networks.

- M. Datum, F. Palmieri and A. Moiseff, "An Artificial Neural Network for Sound Localization Using Binaural Cues," in The Journal of the Acoustical Society of America, Vol. 100, N. 1, pp. 372-383, July 1996.

- F. Palmieri, A. Shah, A. Moiseff, "Neural Coding of Interaural Time Difference," Proc. of IEEE Int. Joint Conference on Neural Networks, Baltimore, MD, pp. IV 271-276, June 1992.

- F. Palmieri, M. Datum, A. Shah and A. Moiseff, "Sound Localization with a Neural Network Trained with the Multiple Extended Kalman Algorithm," Proceedings of International Joint Conference on Neural Networks, Seattle, pp. I 125-131, July 1991.

- A. Moiseff, F. Palmieri, M. Datum and A. Shah, "An Artificial Neural Network for Studying Binaural Sound Localization," Proceedings of the IEEE 17th Annual Northeast Bioengineering Conference, Hartford, CT, pp. 1-2, April 1991.

- F. Palmieri, A. Moiseff, M. Datum and A. Shah, "Learning Binaural Sound Localization Through a Neural Network," Proceedings of the IEEE 17th Annual Northeast Bioengineering Conference, Hartford, CT, pp. 13-14, April 1991.

- F. Palmieri, M. Datum, A. Shah, A. Moiseff, "Application of a Neural Network Trained with the Multiple Extended Kalman Algorithm," Proceedings of the IEEE Third Biennial Acoustics, Speech and Signal Processing Mini Conference, Weston, MA, pp. S16.1-2, April 1991.
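The interaural time difference (ITD) is one of the binaural cues involved. As a non-neural point of comparison, and not the networks described in the papers above, a minimal sketch estimates the ITD as the lag that maximizes the cross-correlation between the two ear signals. It assumes discrete, equally sampled signals and integer-sample lags; `estimate_itd`, the sample rate and the delay are hypothetical.

```python
import random


def estimate_itd(left, right, max_lag):
    """Lag (in samples) that maximises sum_t left[t] * right[t + lag].

    A positive result means the sound reaches the left ear first.
    """
    n = len(left)
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        # Only sum over t where both left[t] and right[t + lag] exist
        score = sum(left[t] * right[t + lag]
                    for t in range(max(0, -lag), min(n, n - lag)))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


if __name__ == "__main__":
    rng = random.Random(0)
    fs = 48000                      # hypothetical sample rate in Hz
    left = [rng.gauss(0, 1) for _ in range(2000)]
    delay = 12                      # right ear hears the sound 12 samples later
    right = [0.0] * delay + left[:-delay]
    lag = estimate_itd(left, right, max_lag=40)
    print(lag, "samples =", lag / fs * 1e6, "microseconds")
```

Dividing the lag by the sample rate converts it to seconds; the resolution is therefore limited to one sample period unless the correlation peak is interpolated.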

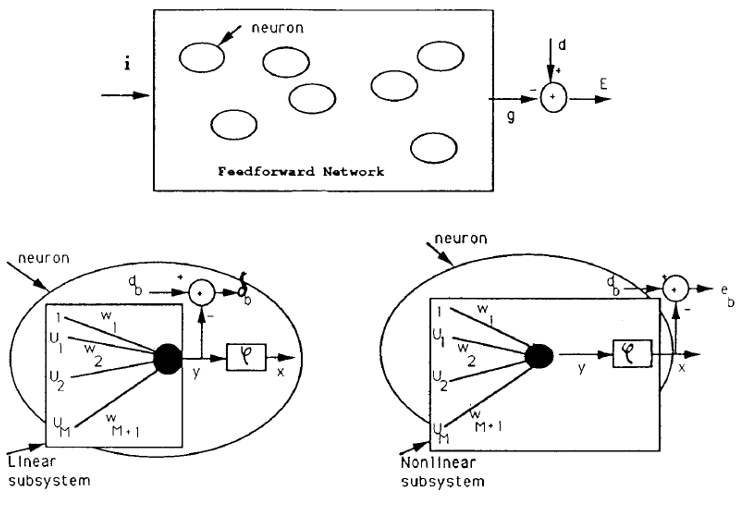

Hebbian Learning

Hebb's postulate, which states that synapses are strengthened according to how effectively they stimulate their target neurons, has been applied to artificial neural networks to derive Hebbian rules for learning the network parameters from examples.

- F. Palmieri, J. Zhu, "Self-Association and Hebbian Learning in Linear Neural Networks," in IEEE Trans. on Neural Networks, Vol. 6, N. 5, pp. 1165-1183, Sept. 1995.

- F. Palmieri, "The Anti-Hebbian Synapse in a Nonlinear Neural Network," Proceedings of WIRN '95, VII Italian Workshop on Neural Nets, Vietri s.m., SA, Italy, pp. 117-122, May 18-20, 1995.

- F. Palmieri, "Hebbian Learning and Self-Association in Nonlinear Neural Networks," (invited paper) Proceedings of IEEE World Congress on Computational Intelligence, Orlando, Florida, June 26-July 2, 1994.

- F. Palmieri, "The Analysis of Mixtures of Hebbian and Anti-Hebbian Synapses at a Neural Node," Proceedings of International Conference on Artificial Neural Networks, Sorrento, Italy, pp. 1071-1074, May 26-29, 1994.

- F. Palmieri, J. Zhu and C. Chang, "Anti-Hebbian Learning in Topologically Constrained Linear Neural Networks: a Tutorial," in IEEE Trans. on Neural Networks, Vol. 4, N. 5, pp. 748-761, Sept. 1993.

- F. Palmieri, J. Zhu, "The Behavior of a Single Linear Self-Associative Neuron," Proceedings of World Congress on Neural Networks, Portland, Oregon, July 1993.

- F. Palmieri, J. Zhu, "Hebbian Learning in Linear Neural Networks: A Review," Technical report 5-93, Department of Electrical and Systems Engineering, The University of Connecticut, Storrs, CT, May 1993.

- F. Palmieri and J. Zhu, "A Comparison of Two Eigen-Networks," Proceedings of International Joint Conference on Neural Networks, Seattle, WA, pp. II 193-199, July 1991.

- F. Palmieri and J. Zhu, "Linear Neural Networks Which Minimize the Output Variance," Proceedings of International Joint Conference on Neural Networks, Seattle, WA, pp. I 791-797, July 1991.

- F. Palmieri and J. Zhu, "Unsupervised Learning in Constrained Linear Networks," Proceedings of the IEEE 17th Annual Northeast Bioengineering Conference, Hartford, CT, pp. 9-10, April 1991.

- F. Palmieri, J. Zhu, "Eigenstructure Decomposition in a Cascaded Linear Network," Proceedings of the IEEE Third Biennial Acoustics, Speech and Signal Processing Mini Conference, Weston, MA, pp. F9.1-2, April 1991.

- F. Palmieri, J. Zhu and C. Chang, "Self-Organizing Linear Neural Networks with an Energy Criterion," Technical Report 92-3, Department of Electrical and Systems Engineering, The University of Connecticut, Storrs, CT 06269-3157, Nov. 1991.
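A Hebbian update increases a weight in proportion to the product of pre- and post-synaptic activity; the anti-Hebbian variant flips the sign, so a connection is weakened when its two neurons are co-active. A minimal textbook-style sketch, not the specific rules of the papers above, uses one anti-Hebbian lateral weight v to decorrelate two outputs: with y1 = x1 and y2 = x2 + v·y1, the update stops changing on average when E[y1 y2] = 0, that is at v = -cov(x1, x2)/var(x1). The function name and data are hypothetical.

```python
import random


def anti_hebbian_decorrelate(samples, eta=0.002, epochs=10):
    """Learn a lateral weight v so that y2 = x2 + v*y1 becomes uncorrelated with y1."""
    v = 0.0
    for _ in range(epochs):
        for x1, x2 in samples:
            y1 = x1
            y2 = x2 + v * y1          # lateral connection from neuron 1 to neuron 2
            v -= eta * y1 * y2        # anti-Hebbian: weaken when y1 and y2 are co-active
    return v


if __name__ == "__main__":
    rng = random.Random(7)
    samples = []
    for _ in range(2000):
        a = rng.gauss(0, 1)
        samples.append((a, 0.8 * a + 0.6 * rng.gauss(0, 1)))   # population correlation 0.8
    v = anti_hebbian_decorrelate(samples)
    # The fixed point is v = -cov(x1, x2) / var(x1), close to -0.8 for this data
    print(round(v, 3))
```

Replacing `v -= ...` with `v += ...` gives the plain Hebbian rule, which instead reinforces the correlation and, without a normalizing term, lets the weight grow without bound.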

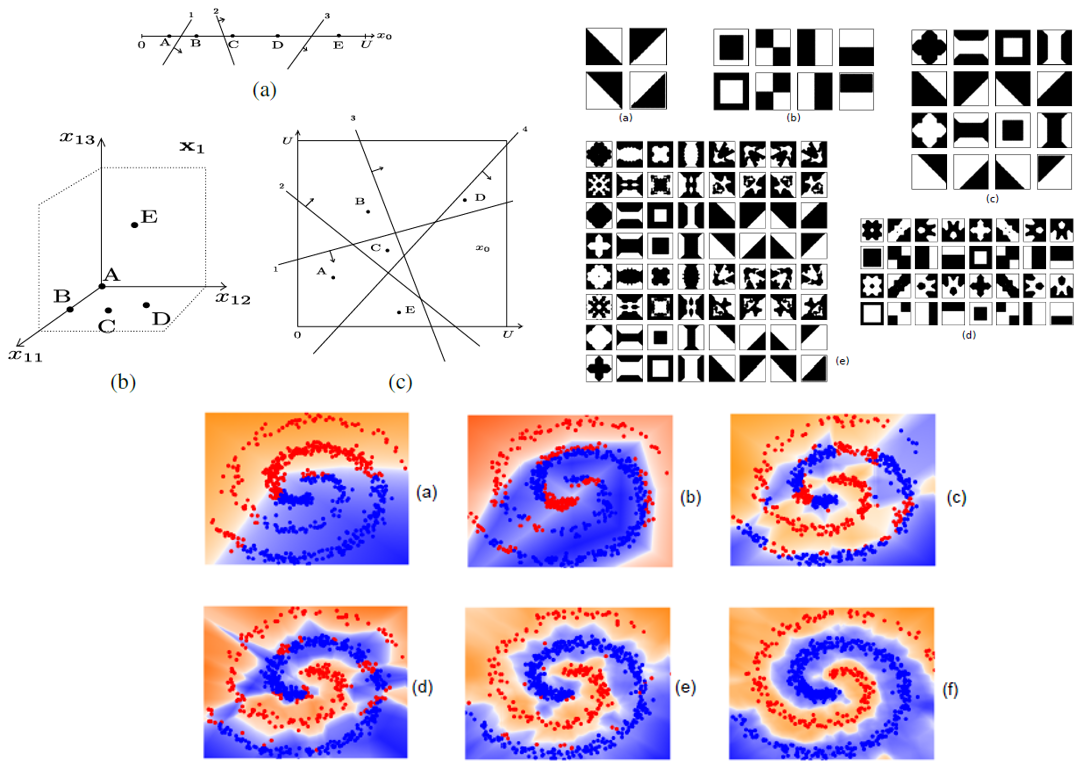

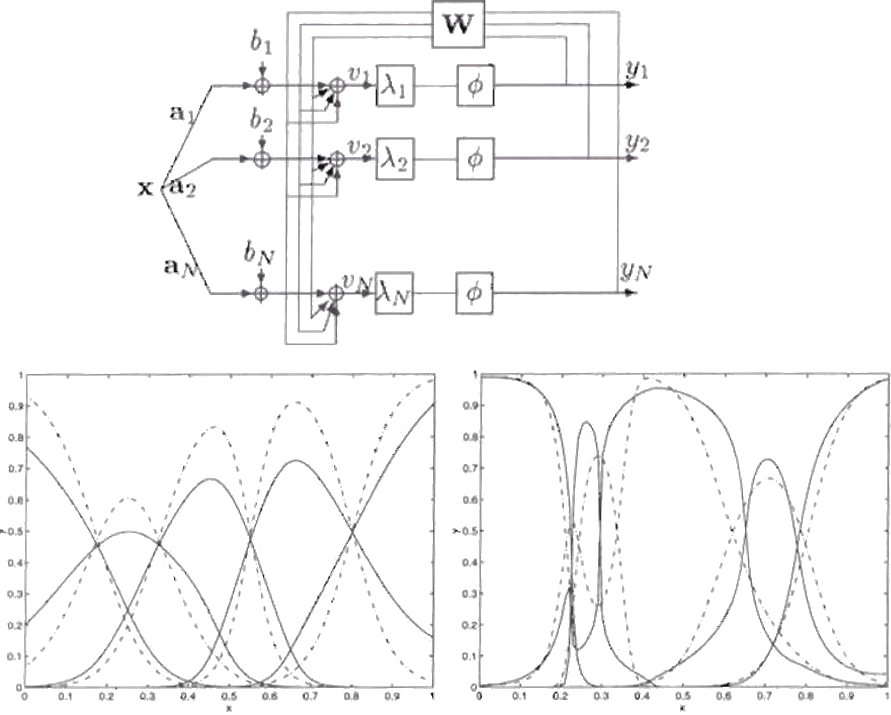

Universal Networks

We use the term Universal Network for a neural network that computes progressive embeddings of the input space, which can then be collected for classification or filtering. We have proposed this paradigm, showing how the embeddings can self-organize on the input data and be used to implement arbitrarily complex functions.

- F. Palmieri, "A Paradigm for Supervised Learning Without Backpropagation," Proceedings of First Conference on Applications of Artificial Intelligence Techniques in Engineering, Naples, Italy, pp. 79-88, Oct. 5-7, 1994.

- F. Palmieri, "Linear Self-Association for Universal Memory and Approximation," Proceedings of World Congress on Neural Networks, Portland, Oregon, July 1993.

- F. Palmieri, "A Self-Organizing Neural Network for Multidimensional Approximation," Proc. of IEEE International Joint Conference on Neural Networks (IJCNN 1992), Baltimore, MD, June 7-11, 1992, Vol. 4, pp. 802-807, DOI: 10.1109/IJCNN.1992.227219, Print ISBN: 0-7803-0559-0.

- F. Palmieri, "A Self-Organizing Neural Network for Nonlinear Filtering," Proc. of IEEE International Symposium on Circuits and Systems (ISCAS '92), San Diego, CA, May 10-13, 1992, Vol. 6, pp. 2629-2632, DOI: 10.1109/ISCAS.1992.230681, Print ISBN: 0-7803-0593-0.

- F. Palmieri, "Hebbian Learning for Universal Memory and Approximation," Tech. Report 92-13, Overheads from a talk delivered at the Dept. of Electrical and Computer Engineering, The Johns Hopkins University, Oct. 22, 1992. Department of Electrical and Systems Engineering, The University of Connecticut, Storrs, CT, 1992.

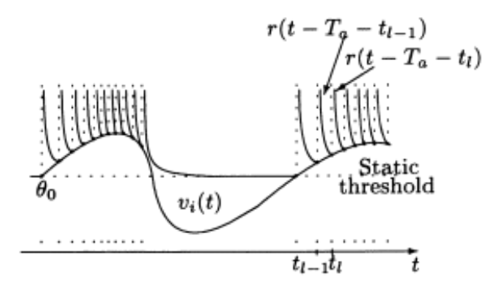

Spiking Neural Networks

We show how to go from a spiking neuron to a sigmoid-based model.

- F. Palmieri, A. Luongo, A. Moiseff, "From Spiking Neurons to Dynamic Perceptrons," Proceedings of the 11th Italian Workshop on Neural Nets, WIRN'99, Vietri s.m., SA, Italy, May 1999.
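A common route from a spiking neuron to a rate-based unit, used here as a stand-in rather than the derivation in the paper above, is to compute the steady firing rate of a leaky integrate-and-fire (LIF) neuron driven by a constant input I. With membrane equation τ dv/dt = -v + R I, reset to 0 and threshold v_th, the rate is 0 for R I ≤ v_th and 1/(t_ref + τ ln(R I / (R I - v_th))) otherwise: zero below threshold, steep above it, and saturating toward 1/t_ref, the shape a sigmoid is often used to approximate. The sketch checks the formula against a direct simulation; all parameter values are hypothetical.

```python
import math


def lif_rate_analytic(I, tau=0.02, R=1.0, v_th=1.0, t_ref=0.002):
    """Steady firing rate (Hz) of a leaky integrate-and-fire neuron, constant input I."""
    if R * I <= v_th:
        return 0.0   # the membrane never reaches threshold
    # Time to charge from reset (0) to threshold, plus the refractory period
    return 1.0 / (t_ref + tau * math.log(R * I / (R * I - v_th)))


def lif_rate_simulated(I, tau=0.02, R=1.0, v_th=1.0, t_ref=0.002,
                       dt=1e-5, t_sim=1.0):
    """Same quantity estimated by Euler integration of tau dv/dt = -v + R I."""
    v, spikes, refractory_until = 0.0, 0, 0.0
    for k in range(int(t_sim / dt)):
        t = k * dt
        if t < refractory_until:
            continue              # membrane is clamped during the refractory period
        v += dt / tau * (-v + R * I)
        if v >= v_th:
            spikes += 1
            v = 0.0               # reset after a spike
            refractory_until = t + t_ref
    return spikes / t_sim


if __name__ == "__main__":
    for I in (0.5, 1.5, 2.0, 5.0, 20.0):
        print(I, round(lif_rate_analytic(I), 1), lif_rate_simulated(I))
```

Fitting a logistic curve to the analytic rate over the input range of interest is one way to obtain a sigmoid-based approximation of the spiking unit.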

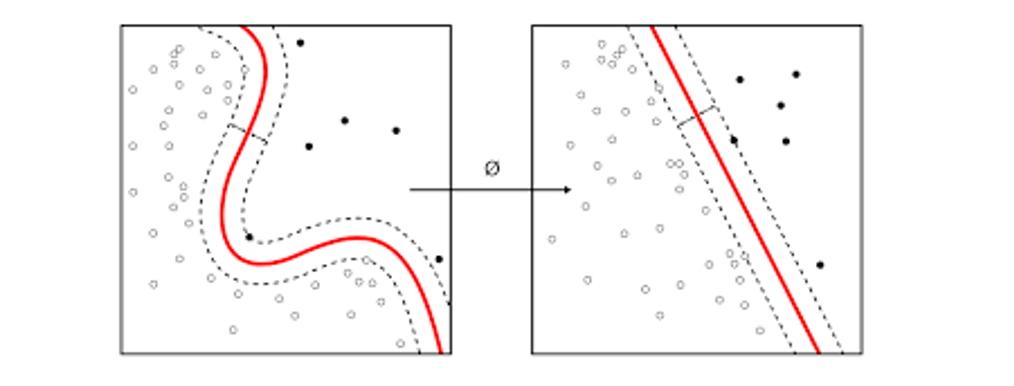

Support Vector Machines (SVM)

We have proposed various approaches to simplifying and training SVM classifiers.

- D. Mattera, F. Palmieri, S. Haykin, "Simple and Robust Methods for Support Vector Expansions," in IEEE Trans. on Neural Networks, vol. 10, n. 5, pp. 1038-1047, Sept. 1999.

- D. Mattera, F. Palmieri, S. Haykin, "An explicit algorithm for training support vector machines," in IEEE Signal Processing Letters, vol. 6, n. 9, pp. 243-245, Sept. 1999.

- D. Mattera, F. Palmieri, S. Haykin, "Generalized Support Vector Machines," Proceedings of European Symposium on Artificial Neural Networks, ESANN'99, Bruges, Belgium, April 1999.

- D. Mattera, F. Palmieri, S. Haykin, "Training Semiparametric Support Vector Machines," Proceedings of the 11th Italian Workshop on Neural Nets, WIRN'99, Vietri s.m., SA, Italy, May 1999.

- D. Mattera, F. Palmieri, S. Haykin, "Adaptive nonlinear filtering with the support vector method," in Signal Processing IX - Theory and applications - Proceedings of Eusipco-98 - Ninth Signal Processing Conference, Rhodes, Greece, 8-11 September 1998, S. Theodoridis, I. Pitas, A. Stouraitis, N. Kalouptsidis (eds.), Typorama Editions, Patras, Greece, pp. 773-776, 1998.

- D. Mattera, F. Palmieri, "Support Vector Machine for Nonparametric Binary Hypothesis Testing," Proceedings of the 10th Italian Workshop on Neural Nets, Springer Verlag, Vietri s.m., SA, Italy, pp. 132-137, May 1998.

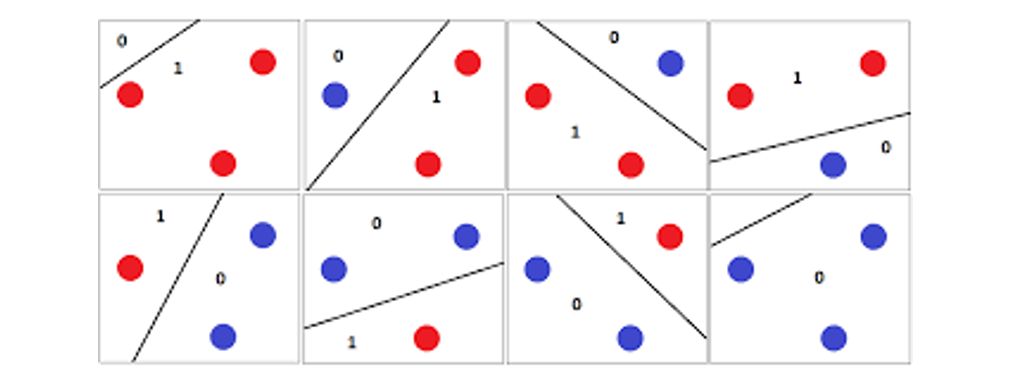

Learning Theory

Vapnik-Chervonenkis (VC) theory bounds the ability of a classifier to generalize. We have derived new bounds and analyses within this framework.

- D. Mattera, F. Palmieri, "New Generalization Bounds with a Non-Null Training Error," Internal Technical Report, Dip. di Ing. Elettronica e delle Telecomunicazioni, Univ. di Napoli "Federico II", via Claudio 21, 80125 Napoli (Italy), 1998.

- D. Mattera and F. Palmieri, "Improvement of a Bound in Learning Binary Functions," Internal Technical Report, Dip. di Ing. Elettronica e delle Telecomunicazioni, Univ. di Napoli "Federico II", via Claudio 21, 80125 Napoli (Italy), 1998.

- D. Mattera, F. Palmieri, "New Bounds for Correct Generalization," Proceedings of IEEE International Conference on Neural Networks, Houston, TX, pp. 1051-1055, June 1997.

- D. Mattera, F. Palmieri, "A Distribution-Free VC-Dimension-Based Performance Bound," Proceedings of the 9th Italian Workshop on Neural Nets, Springer Verlag Ed., Vietri s.m., SA, Italy, pp. 162-168, May 1997.

- F. Palmieri, D. Mattera, "The Computational Neural Map and its Capacity," Proc. of WIRN '96, VIII Italian Workshop on Neural Nets, Vietri s.m., SA, Italy, pp. 137-142, May 23-25, 1996.

- F. Palmieri, D. Mattera, "An Approach to Network Capacity in Continuous-Valued Neural Networks," Proceedings of World Congress on Neural Networks, San Diego, CA, pp. 1003-1006, Sept. 15-18, 1996.
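For orientation, the classic VC bound for 0/1 loss states that, with probability at least 1 - η over l i.i.d. training samples, every classifier in a class of VC dimension h (h < l) satisfies R ≤ R_emp + sqrt((h (ln(2l/h) + 1) - ln(η/4)) / l). The sketch below only evaluates this standard confidence term; it does not reproduce the new or improved bounds of the papers above, and `vc_confidence` is a hypothetical helper name.

```python
import math


def vc_confidence(h, l, eta):
    """Confidence term of the classic VC bound for 0/1 loss.

    With probability at least 1 - eta, for every classifier in a class of VC
    dimension h trained on l samples (h < l):
        R <= R_emp + sqrt((h * (ln(2l/h) + 1) - ln(eta/4)) / l)
    """
    return math.sqrt((h * (math.log(2 * l / h) + 1) - math.log(eta / 4)) / l)


if __name__ == "__main__":
    # The gap shrinks roughly like sqrt(h log(l) / l) as the training set grows
    for l in (1_000, 10_000, 100_000):
        print(l, round(vc_confidence(h=10, l=l, eta=0.05), 4))
```

With h = 10, l = 10,000 and η = 0.05 the term is about 0.095, which shows why distribution-free bounds of this kind are often loose and why sharper bounds are of interest.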